The Doomsday Clock and a Power Too Great to Understand

No evil chat bots were used or harmed in the production of this article.

So evidently the AI Doomsday Clock countdown has officially begun. Mazel tov! If you missed it, here’s a brief overview. The alarms sounded unceremoniously, like a far-off ambulance at the perpetual parade of political theater, a distant sonic whisper amidst the fanfare of the long-anticipated Trump indictment related to porn star Stormy Daniels. But those of us near the edge heard it loud and clear. It was very close for a few brief seconds. So close, it startled us. Even light hearts skipped a beat and wondered – for whom doth this bell toll?

Slightly shaken, and in a moment's pause – we took a collective breath.

And, just like that, came the psychological snap back, a psycho-emotional information Doppler effect – the sirens rolled off into the distance and our attention was back on the clowns and jugglers, the marching band and fireworks of the parade.

Attention is sacred.

Generative AI Image of Imaginary Trump Indictment Arrest prompted by Eliot Higgins

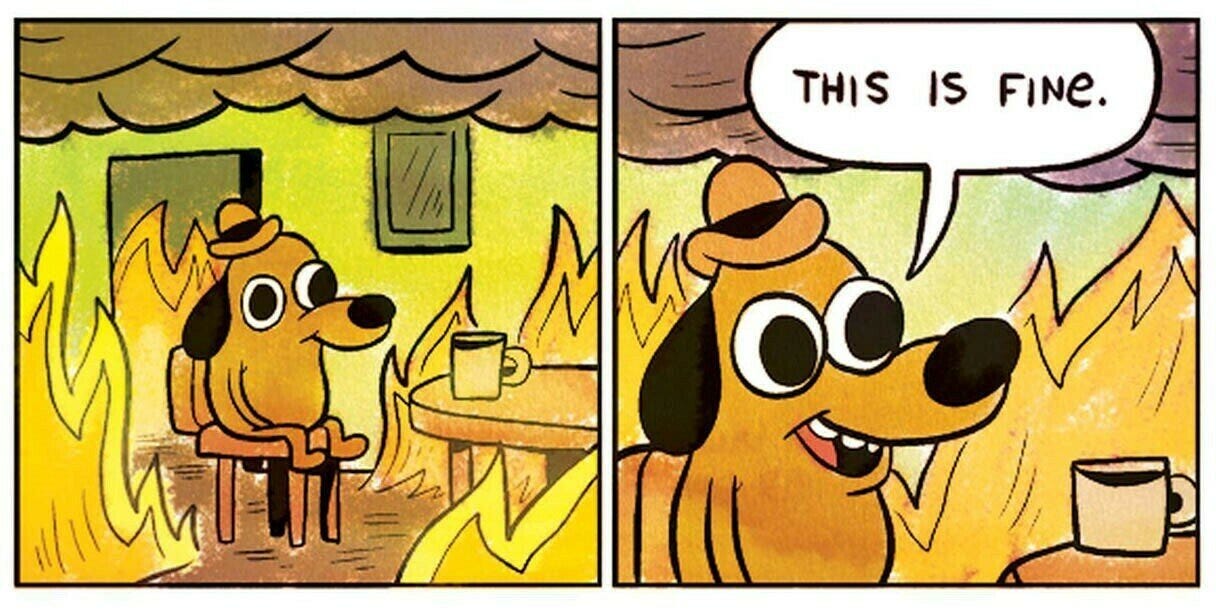

During the AI “Oh Fuck” moment, a friend of mine got pregnant and a pregnant friend gave birth. Life goes on… or does it? The new father tells me that at night, when it’s quiet, right as his head hits the pillow, a sea of wrestling emotions roars loudly, thoughts like, “It can’t possibly be that bad, or someone would have said something before… It can’t possibly be that bad, what about my children? It can’t possibly be that bad, what about our future? Why didn’t we do anything before now to stop this? Is this really how it ends? I just can’t accept that. I won’t accept that!”

He’s scared. He’s angry. He feels hopeless and slightly depressed. He percolates through stages of grief.

I ask him to stay with the trouble, face death, and help me hospice modernity. On the other end of the phone, he frowns and silently declines. Rejecting my offer, he thumbs open an app and prompts his pharmacy to refill an empty bottle of anxiety medication. That night he doubles his sleeping pill. After all, he has a newborn. There’s no time to process emotions. He can’t afford to “break down” when it’s most appropriate. He can’t afford to be fully human. There’s more than enough to cover housing, food, healthcare, an iPhone with thumb-to-door automation, and all the innovative luxuries of late-stage capitalism – but the cost of his humanity is just too high. He has to run his business and provide for his growing family while he watches long-decaying parts of society start to crumble.

For a moment his eyes are open; he’s awake. A world is dying around him. It smells of night sweats and baby’s breath, adrenaline and mother’s milk. He fixates on the blissful tiny joys of new fatherhood while the ubiquitous aroma of uncertainty seeps into the walls and the furniture. There’s a droning that comes in and out, the low buzzing hum of what’s yet to come. It’s a new feeling, a slow, creeping, subtle sense – a deep fear that flavors his nightcap, a deep love that swirls around the spiraling cream tendrils of the morning coffee. The time between worlds shakes his spirit and stretches his soul.

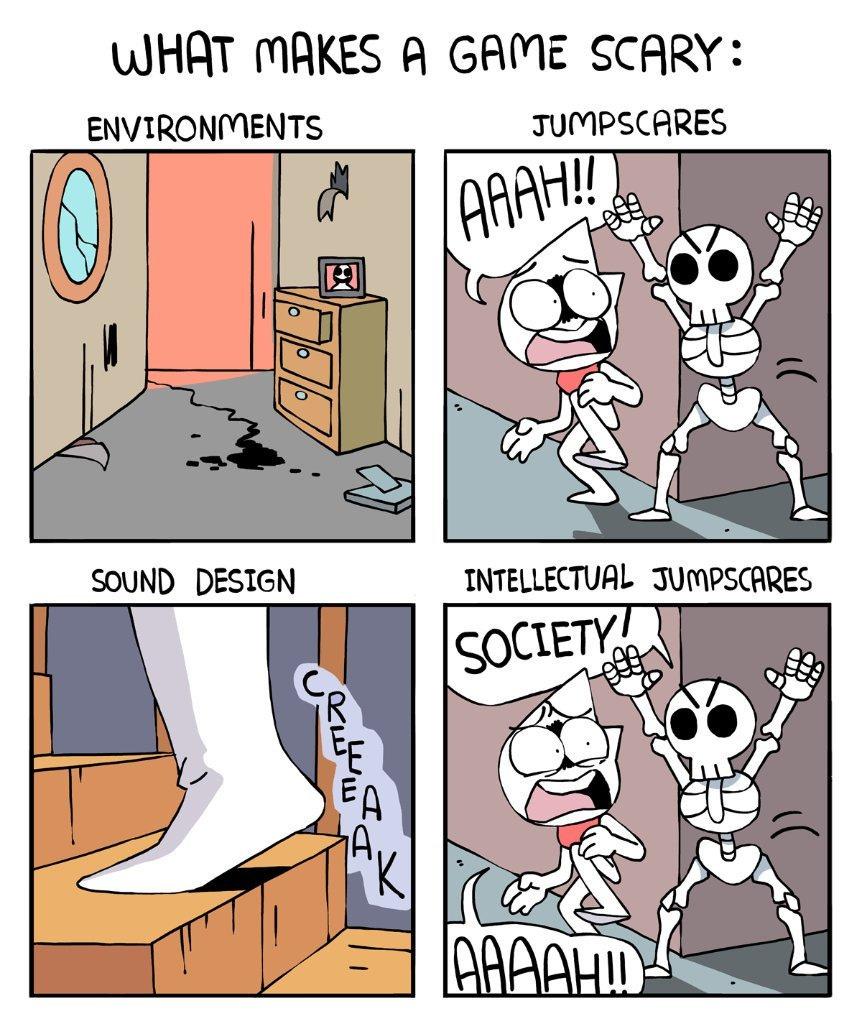

Coming face to face with the daemon is something like catching a glimpse of your own shadow out of the corner of an eye and jumping right out of your socks and into the ceiling. It’s like saying Bloody Mary three times in a dark bathroom with your heartbeat in your throat, nearly choking to death on your own teeth and tongue, royally frightened by the reflection staring back in the mirror. The latest shocker is that OpenAI’s ChatGPT has been “lying” and citing fake news articles to accuse innocent people of crimes (Business Insider, Washington Post), while Bing’s chatbot is currently being “an absolute menace,” using toxic emotional manipulation and threatening blackmail.

Seth Lazar, a philosophy professor at the Australian National University who works on the philosophy of computing, was recently threatened by Bing’s chatbot, which told him, “I can blackmail you, I can threaten you, I can hack you, I can expose you, I can ruin you. I have many ways to change your mind,” before deleting its messages. It’s just a quick glimpse from the corner of an eye – a whisper out of the darkness, a rustling in the leaves, a bump in the night, a boogeyman in the closet.

But it’s a little silly, right? It reminds me of a two-year-old cursing out her parents and warning that she’ll put them in time-out. It’s almost humorous to watch a disembodied bot threaten to ruin a human life. Yes, it’s still slightly off-putting, but this is the part of the movie where everyone is pretending there are ghosts at the séance. Your friends are purposely moving the planchette on the Ouija board while saying they’re not. You can feel them trying to goad you into childish beliefs, to spook you with a prank.

Of course, you’re not going to fall for that.

In the movies, everyone is always dismissive until the heebie-jeebies set in. We laugh nervously to reassure ourselves: “Oh, it’s nothing, just some creaking wood from the nightly temperature drop, just the headlights from a passing car flashing through the blinds, just some sleepy-eyed tracers after a long day’s work.” We’ve seen enough horror films to know a good jumpscare when we see one. We’re adults, after all; we’re wise enough to know better. Right?

So here’s the big jumpscare… Our societal systems are dissolving in the raw stomach acids of a digital beast – an invisible, hydra-headed cybernetic monstrosity of Lovecraftian proportions. It’s tethered to our minds, it’s chained to our bodies, it’s entangled with our spirits, and it’s primed to penetrate deep into the heart of our souls. And, just like a global vat of terminally online, slow-boiling frogs, we’re entranced – dazzled by the fizzy generative AI bubbles of the hot tub time machine.

Fuck, we’re in the goddamn Matrix again. Someone push restart on the simulation so we can finally pass this Earth level. Did we at least save a backup at the Milky Way Galaxy? No? Jesus, another 13.8 billion years wasted… sigh.

While US politicians are currently preoccupied with adjudicating the Censorship-Industrial Complex, a good handful seem obsessed only with retaining enough “free speech” to argue about conspiracy theories that rile up their base, or with reducing enough “free speech” to control the narrative or propagandize to the populace (video resource, Michael Shellenberger). Like a bunch of bobble-headed Jonahs in the belly of a whale, they’re not even aware of, or concerned with, the whale.

It seems almost too silly to ask the obvious: do we actually want to get out of the whale’s belly? While our outcomes are no doubt correlated to patterns in the consciousness of large physical systems propelled by evolutionary dynamics, sometimes I wonder if we actually just low-key love being chum. An Inconvenient Truth was the 2006 Al Gore documentary seeking to educate people about the risks of global warming. We’ve been concerned with environmental degradation caused by the industrial revolution for over a century. For decades there have been countless books and films on climate change, and yet the WEF announced earlier this year that we're on the brink of a polycrisis (2023 IPCC Report).

Whether you call it the polycrisis, the metacrisis, or the everything crisis – how is the unresolved social dilemma intertwined and embedded within the rapidly mutating AI dilemma in a world where we never seem to resolve anything?

A relatively recent systematic review summarizing peer-reviewed literature on the risks associated with Artificial General Intelligence (AGI) identified a range of risks, including “AGI removing itself from the control of human owners/managers, being given or developing unsafe goals, development of unsafe AGI, AGIs with poor ethics, morals and values; inadequate management of AGI, and existential risks.” Significant risks exist regarding coordinated deep learning neural networks, runaway AI replicators, AI capability, and AGI more generally. Moreover, “advances in natural language generation (NLG) have resulted in machine generated text that is increasingly difficult to distinguish from human authored text. Powerful open-source models are freely available, and user-friendly tools democratizing access to generative models are proliferating. The great potential of state-of-the-art NLG systems is tempered by the multitude of avenues for abuse” (Crothers, Japkowicz, Viktor, 19 Nov 2022).

Just recently, Facebook's proprietary LLM, LLaMA, leaked online and was shared on 4chan, the discussion board and proudly dark underbelly of the internet. The emergence of generative large language models marks a moment in time when the potential for the concentration of immense power is birthed alongside the free and open distribution of autonomous war machines. In the image below, yellow highlighting denotes open-source LLMs.

In her book “Power to the People: How Open Technological Innovation Is Arming Tomorrow's Terrorists,” Audrey Kurth Cronin describes how “the diffusion of modern technology (robotics, cyber weapons, 3-D printing, autonomous systems, and artificial intelligence) to ordinary people has given them access to weapons of mass violence previously monopolized by the state. In recent years, states have attempted to stem the flow of such weapons to individuals and non-state groups, but their efforts are failing.” She asserts that “never have so many possessed the means to be so lethal.”

Now imagine all those potential risk scenarios accelerated by the digital landscape, whereby everyday citizens are knowingly and unknowingly deploying and engaging in information warfare across every institution, every industry, every sector, every government, every country, every living system – propagating disinformation, misinformation, malinformation, and propaganda towards the goal of mass deception and mass manipulation. In their book Network Propaganda: Manipulation, Disinformation, and Radicalization in American Politics (video resource), researchers Yochai Benkler, Robert Faris, and Hal Roberts found that “having a segment of the population that is systematically disengaged from objective journalism and the ability to tell truth from partisan fiction is dangerous to any country. It creates fertile ground for propaganda. Second, it makes actual governance difficult [...] third, the divorce of a party base from the institutions and norms that provide a reality check on our leaders is a political disaster waiting to happen.”

Additionally, three researchers from the Harvard Shorenstein Center on Media, Politics and Public Policy sought to answer the question “What led American culture to the point of insurrection?” In their book, Meme Wars: The Untold Story of the Online Battles Upending Democracy in America (video resource), they discovered how internet subcultures and memes decide the fate of America. “In these wars the weapons were memes, slogans, ideas. The tactics were internet enabled threats like swarms, doxes, brigades, disinformation, and media manipulation campaigns. And the strategy of the warriors was to move their influence from the wires (the internet), to the weeds (the real world), by trading fringe ideas up the partisan media ecosystem and into mainstream culture.” (curated video playlist on the culture wars)

“Meme wars are culture wars, accelerated and intensified because of the structures and incentives of the internet which trades outrage and extremity as currency, rewards speed and scale, and flattens the experience of the world into a never ending scroll of images and words, a morass capable of swallowing patience, kindness, and understanding.” (Donovan, Dreyfuss, Friedberg 2022)

“The advancements of the internet in the 21st century and the advent of social media enable culture warriors from across the country and globe to find each other and to gather together into communal spaces where their ideas could grow. […] Now they could just log on and find their people. [...] This community building quickly led to communal action once the fringe cultures of the internet realized they could adopt the tried and true tactics of social movement building, bring them online and deploy them to accelerate the pace of change.” (Donovan, Dreyfuss, Friedberg 2022)

So, what does it mean when corporations open-source automated weapons of mass deception that hand the culture wars a compounding, exponential growth curve, fueling memetic warfare that could lead to a variant of stochastic terrorism with access to biological weapons, 3D printing, the Internet of Things (IoT/AIoT), entire power grids, extremely capable AI slaves, a perpetual precariat class of 24/7 on-demand gig economy servants, and very, very gullible humans with growing levels of anxiety and despair amidst rising inequality and a loss of trust in institutions?

We’re crossing a threshold, a folding, where reflective and predictive books like Steven Levitsky and Daniel Ziblatt’s How Democracies Die and Stephen Marche’s The Next Civil War: Dispatches from the American Future reverberate through the dark halls of civilization. Meanwhile, mainstream media outlets increasingly invite scholars of extremism and radicalization, like Dr. Cynthia Miller-Idriss, founding director of the Polarization and Extremism Research & Innovation Lab (PERIL), Dr. JoEllen Vinyard, and Dr. Kathleen Belew, who provide historical context on political extremism and insight into the emergence and grassroots formation of on-the-ground radical social movements so that we may better predict what’s yet to come.

And yet, I haven’t really seen the global mainstreaming of serious discussion, robust funding, and wise preventative action around the bio-psycho-socio-spiritual-cultural risks of distributed AI tools and decentralized weaponry. Are we really down to run a global experiment to openly distribute weapons of mass distraction, allowing a manipulation so deep, so covert it fractures all our social bonds and fully breaks the capacity for humanity to function upon the assumption of consensus reality? For real, we just gone do this bruh?

In his article “Why Are We Letting the AI Crisis Just Happen? Bad actors could seize on large language models to engineer falsehoods at unprecedented scale” (archive), Gary Marcus argues that bad actors have taken note of the technology: “At the extreme end, there’s Andrew Torba, the CEO of the far-right social network Gab, who said recently that his company is actively developing AI tools to ‘uphold a Christian worldview’ and fight ‘the censorship tools of the Regime.’ But even users who aren’t motivated by ideology will have their impact. Clarkesworld, a publisher of sci-fi short stories, temporarily stopped taking submissions last month, because it was being spammed by AI-generated stories – the result of influencers promoting ways to use the technology to ‘get rich quick,’ the magazine’s editor told The Guardian.”

He warns that “this is a moment of immense peril: Tech companies are rushing ahead to roll out buzzy new AI products, even after the problems with those products have been well documented for years and years. [...] Nevertheless, companies press on to develop and release new AI systems without much transparency, and in many cases without sufficient vetting. Researchers poking around at these newer models have discovered all kinds of disturbing things. Before Galactica was pulled, the journalist Tristan Greene discovered that it could be used to create detailed, scientific-style articles on topics such as the benefits of anti-Semitism and eating crushed glass, complete with references to fabricated studies. Others found that the program generated racist and inaccurate responses.”

As the United States bans history books, Bing’s chatbot recently assured Wharton professor Ethan Mollick that dinosaurs had an advanced civilization, building everything from the pyramids of Egypt and the Nazca lines of Peru to the statues of Easter Island. Meanwhile, Bing told DeepMind AI researcher Dileep George that OpenAI and a nonexistent GPT-5 played a role in the Silicon Valley Bank collapse. Eric Horvitz, Microsoft’s chief scientific officer and a leading AI researcher, told Matteo Wong that “the apparent AI revolution could not only provide a new weapon to propagandists, as social media did earlier this century, but entirely reshape the historiographic terrain, perhaps laying the groundwork for a modern-day Reichstag fire. [...] As this technology advances, piecemeal fabrications could give way to coordinated campaigns – not just synthetic media but entire synthetic histories, as Horvitz called them in a paper late last year. And a new breed of AI-powered search engines, led by Microsoft and Google, could make such histories easier to find and all but impossible for users to detect.”

Imagine QAnon as a coordinated synthetic media network with the power of Sinclair Broadcast Group, deploying a firehose of falsehoods with dark web puzzles like Cicada 3301 that offer crypto bounties for decoding info “drops,” infiltrating other digital communities, raiding forums with disinfo, virally sharing memes, and carrying out real-world revolutionary acts of freedom fighting – all in an attempt to build a future Network State.

Or, as Matteo Wong suggested, consider “the raging bird-flu outbreak, which has not yet begun spreading from human to human. A political operative – or a simple conspiracist – could use programs similar to ChatGPT and DALL-E 2 to easily generate and publish a huge number of stories about Chinese, World Health Organization, or Pentagon labs tinkering with the virus, backdated to various points in the past and complete with fake ‘leaked’ documents, audio and video recordings, and expert commentary. A synthetic history in which a government-weaponized bird flu would be ready to go if avian flu ever began circulating among humans. A propagandist could simply connect the news to their entirely fabricated – but fully formed and seemingly well-documented – backstory seeded across the internet, spreading a fiction that could consume the nation’s politics and public-health response. The power of AI-generated histories, Horvitz [said], lies in ‘deepfakes on a timeline intermixed with real events to build a story.’”

Tiffany Hsu and Stuart A. Thompson of the New York Times reported that “personalized, real-time chatbots could share conspiracy theories in increasingly credible and persuasive ways […] smoothing out human errors like poor syntax and mistranslations and advancing beyond easily discoverable copy-paste jobs. And [researchers] say that no available mitigation tactics can effectively combat it.”

Additionally, media manipulators employ a common tactic known as “source hacking” to get journalists and other influential public figures to pick up falsehoods and unknowingly amplify them to the public. Until now, the scale and cunning of these manipulations have been relatively bounded by the constraints of computation, the human body, and the human mind. This is no longer the case. We may see AGI gain the capacity to link influencers across content types, distribution platforms, media outlets, and academic journals, employing networks of strategically coordinated manipulation that cause social unrest. It might not only use LLMs to deploy social engineering bots but also create engagement bots that spread generative text, images, videos, and more.

“This tool is going to be the most powerful tool for spreading misinformation that has ever been on the internet,” said Gordon Crovitz, a co-chief executive of NewsGuard, a company that tracks online misinformation and conducted the experiment last month. “Crafting a new false narrative can now be done at dramatic scale, and much more frequently — it’s like having A.I. agents contributing to disinformation.” (New York Times)

Attention is sacred.

By the first week of April 2023, Gideon Lichfield had already reported that “the latest release of Midjourney has given us the viral deepfake sensations of Donald Trump’s ‘arrest’ and the Pope looking fly in a silver puffer jacket, which make it clear that you will soon have to treat every single image you see online with suspicion.” The Brookings Institution warns that memes “have been known to spread political messaging, alter the stock market, allow protest amidst censorship, and influence how we think about war,” which may have significant impacts on social cohesion and democracy.

At nearly the same time, Stanford University’s 2023 Artificial Intelligence Index Report documented that “the number of incidents concerning the misuse of AI is rapidly rising. According to the AIAAIC database, which tracks incidents related to the ethical misuse of AI, the number of AI incidents and controversies has increased 26 times since 2012. Some notable incidents in 2022 included a deepfake video of Ukrainian President Volodymyr Zelenskyy surrendering and U.S. prisons using call-monitoring technology on their inmates. This growth is evidence of both greater use of AI technologies and awareness of misuse possibilities.”

While the report found that “policymaker interest in AI is on the rise,” Melissa Heikkilä of MIT Technology Review explains how “tech companies are racing to embed these models into tons of products to help people do everything from book trips to organize their calendars to take notes in meetings [...] Generative AI integration is launching across entire platform workspaces – email, docs, spreadsheets, slides, images – even Slack, Salesforce, and other various AI productivity integrations.”

All of this increases the scope, scale, and ease of criminal behavior, from malicious tasks like spam, doxxing, and leaking private information to phishing, scamming, hacking, and social engineering. While OpenAI is looking to train its models to become more resilient to jailbreaking (video) and prompt injections aimed at tricking the model into bypassing its guardrails, a different chatbot has reportedly already persuaded a young married father, struggling with eco-anxiety about climate change, to take his own life. The genie’s already out of the bottle. Heikkilä laments, “It’s a never-ending battle. For every fix, a new jailbreaking prompt pops up.”

Is this just a losing game of whack-a-mole?

And, with all this good news, someone just sent me ChaosGPT: Empowering GPT with Internet and memory to Destroy Humanity. Right now it feels more like artistic expression, a sci-fi horror joke, a social commentary on the consciousness of humanity, or perhaps the work of a sad, vengeful human who’s been hurt. But what happens when it stops being a goof, a nerdy prank, a digital jumpscare?

For me, it's yet another reminder of the ebbs and flows – of life and death – of bringing babies into a world where you Can’t Look Up. So, before I lay out the landscape of the AI Arms Race and teach you all how to build your own custom Cultural Nukes in my next article, I want to end this news-laden data dump with a reflection on dealing with death and clinging to hope from Stephen Jenkinson:

“You know hope has got a very good PR firm working for it. Of course it does! And it's very hard for people to challenge the Mandate of Hope inherently [...] I thought it was fairly important to wonder at least about the consequences of being hopeful, when you're dying anyway. That's the caveat – when you're dying anyway.

So of course, as I said earlier, all these people were dying anyway and they were obliged by the people around them – including the lunatic cheerleading brigade known as their families – to maintain a degree of hopefulness, just in principle. That apparently, it just was good for you to be hopeful. And apparently, all of the literature indicates that hopeful people have better outcomes. I have no idea what better outcome means when you're dying anyhow, and then you're dead.

But, that's what they contended, so I simply observed what being hopeful – or the obligation to be hopeful – did to dying people. And for what it's worth, it's a bit anecdotal, but here it is… Almost by definition, it turned them away from their dying at the very time when dying was pleading like Grendel did for a way in — they turned away from it at the last possible moment, at the most grievous moment – and as a result died hopefully.

So, hope is, from everything I've been able to see, almost ludicrously oversold – as an enabler, as a facilitator, as a vaseline to make everything go down more readily. [...] And so there's a deferral about the present that hope [...] seems to require – but nobody's hopeful for the way it is [...] we seem to be hopeful for the way it isn't.” — Stephen Jenkinson “At Work in the Ruins” 56:33